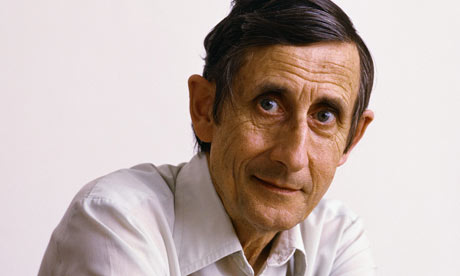

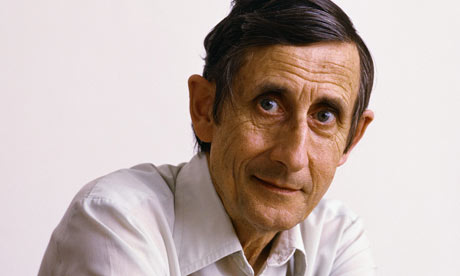

In the mid 1970s one of Britain’s most eminent theoretical physicists, a pioneer of quantum physics, pure mathematician and metaphysicist (now residing in New Jersey, aged 89), wrote an article called “The Hidden Costs of Saying No”. That man was Freeman Dyson, who to this day is one of the most forward-thinking scientists to have walked this earth. His work in nuclear physics and quantum electrodynamics contributed to advances that we take for granted today.

The paper he penned in 1974 first crept across my desk when I was a student in the early 1990s, and it is probably as relevant today as it was the day it left his typewriter in the mid 1970s. I remember reading it as part of work I was doing on environmental technologies and investment in technology. I didn’t think I’d ever be pulling it out again, but its pertinence to where we are today in Cloud is enormous.

The paper argues eloquently that the price we pay for not doing something should be weighed carefully during the decision process. I can pull some key phrases out of the paper here. Thirty-eight years on they still resonate and still apply to technology processes today.

Dyson writes: “it is not enough to count the hidden costs of saying yes to new enterprises. We must also learn to count the hidden costs of saying no. The costs of saying no may be high, although they are often uncertain and intangible. Our existing political processes introduce a strong bias into the consideration of new enterprises.”

“We need to know more accurately the costs of saying no, and we need procedures that allow a more realistic weighing of uncertainties when knowledge is lacking.”

In a nutshell, Dyson described a lot of the things we take for granted when working with Open Source methodologies and technologies: daily development routines and complex decision making, sped up by the global sharing of ever-changing but singularly stable code trees, with that code polished and brought to the enterprise market by Red Hat. It means saying no to established, costly proprietary ways of working that tie us into vendor lock-in and also slow down the establishment and stabilisation of future technology.

In Cloud we have tangible decisions to take, and those decisions affect our core existing infrastructures as much as they affect our future road maps for technology advancement and technology adoption. They also often shape our businesses as we grow them by harnessing more intelligent, open ways of working. I am fortunate to work with some of the best and brightest from MIT and Dartmouth. I can count in my Rolodex (OK, artistic licence: my email address book) some of the most capable technologists on the planet. We have all, to a man (or woman), grown our careers and shaped our working days by sharing. Every day we create pathways openly, in a community that stands up to be counted. In Cloud it’s no different.

The practical advancements in everything from storage to provisioning, from application deployment to lifecycle management, rely on constant re-evaluation of process and, often, of political dogma. We regularly challenge the established old-school ways of working in software environments, both to satisfy the ever-growing needs of our customer base and because, as fast as we release stable supported code, the underlying network and physical hardware we rely on to pump those ones and zeroes changes. That change affords us new highways to motor down to deliver our cargoes: certifying RHEL / RHEV / JBoss / Gluster in a supported release on the latest and greatest technology environments, and honing, performance testing, securing and documenting to provide stable, polished, deployable environments.

Take Platform as a Service (PaaS). A decade ago, getting an application into a live environment to be consumed both internally and externally would have meant going through a whole series of change controls, interpersonal relationships and decision-making circuits within an organisation. All of it was time consuming.

So we’re talking 2000-2. Web-based application development wasn’t in its infancy, but the relationship between DevOps and ITOps was a lot less equal than it is now. A siloed mentality meant decision making was often disproportionately weighted towards the perceived greater good rather than advancement. Open Cloud now, in 2012, affords us the ability to take the best our developers can deliver and get it out in a safe and supportable manner, using a range of tools, languages and libraries like never before, and to share this globally.

I truly believe the last proprietary technologies we will see in the datacentre are VMware and, to a much lesser extent (because of current adoption levels), Microsoft’s Hyper-V cloud technology. You could see that as a contentious statement, but there is less funding available for IT projects globally, and our masters and our consumers are more savvy and expect more for their pound, euro, dollar, rupee or yen. Critically, we have to be able to do more with less headcount: to maintain what we have while still getting to the next level in harnessing technology and delivering against business need.

The only way you can do that is openly.

If you understand real-world, sane economics, whether at the processor core level or at the application development and management level, the only way you can do that is openly. Sinking your investment into a proprietary core product, and then stretching your IT architectures around something that makes you fit around it rather than working to your best advantage, holds no credible place in the long-term procurement strategy of the savvy CIO.

I was at VMworld in 2012 in Barcelona. I will probably be blackballed for writing this and not get invited back, but it reminded me of the same sort of protective, overarching ethos as the Windows shows circa 2000-2001, when BackOffice was at its most hyped. BackOffice was great for those who needed a GUI to provision a file and print environment, to stand up a SQL database, or to provision a mail system. It was the de facto go-to environment for those who measured their technical prowess by the number of trained staff who could click a mouse and read Event Viewer without falling into a coma. It was point-and-click enterprise computing at its most basic, supplemented by “developers” who used Visual Basic and Visual Studio to take runtimes and precompiled, often MSDN-sourced, libraries and get applications and databases to work. It wasn’t cutting edge. It wasn’t innovative, and it’s one reason many of these organisations and system integrators were left behind, in both growth and revenue, by the more savvy tech startups who went Open and used code from Red Hat and the Open Source community.

The companies that adopted an open strategy (the companies that have become the dot.com darlings you rely on daily) used Linux. They used Samba, they used Apache, and they used Exim/Sendmail/Postfix for their mail, because they spent money on people and research rather than on per-seat or per-mailbox licensing. They didn’t use Microsoft SQL or Oracle; they just used MySQL or Postgres. At the rate they needed to develop, a paid-for access model would have broken them. The technology also sucked (a lot), both from a performance perspective and because you couldn’t get under the hood and tinker. They also contributed back, sharing information and sharing modifications for the common good. They challenged the hidden costs of saying no by embracing opportunity cost and common technical challenges rather than signing a EULA and waiting for the next MSDN CD box to arrive in the post.

The forward-thinking companies that became the backbone of the internet relied on Linux. Not Solaris, not SCO, not Microsoft Windows NT or BackOffice. They deployed at speed, and they were able to ride on the rapid advancement of development environments such as PHP, Perl, Python and Java to get things done, and to get them done stably and openly. These are the companies that now have the banked revenue, the earnings figures and the technologies we consume (and more often than not Red Hat provides the support and the backbone for the stable platforms these technologies are based on).

In Cloud we do the EXACT same thing. We build using what we have, and we are brave enough to ask questions to understand what we have and what we need. We change the dynamic of IT by being brave enough to follow the example of Freeman Dyson.

In 1974, as part of this paper, Dyson stated: “Technology has always been, and always will be, unpredictable. Whenever things seem to be moving smoothly along a predictable path, some unexpected twist changes the rules of the game and makes the old predictions irrelevant.” You are unlikely to find a more visionary statement in any management textbook or MBA guidance.

And for those who try to challenge, control or dictate privacy regulation, or to impose territory-specific or sovereign-specific controls on Cloud: beware. Freeman Dyson saw you coming nearly forty years ago. He cleverly ties in William Blake’s writing and states, with a punch that our elected officials in the EU and in other countries should take heed of:

“The other lesson that we have to learn is that bureaucratic regulation has a killing effect on all creative endeavor. No matter how wisely framed and well intentioned, legal formalities tend to become inflexible. Procedures designed to fit one situation are applied indiscriminately to others. Regulations, whose purpose was to count the cost of saying yes to an unsound project, have the unintended effect of saying no to all projects that do not fit snugly into the bureaucratic system. Inventive spirits rebel against such rules and leave the leadership of technology to the uninventive. These are the hidden costs of saying no. To mitigate such costs, lawyers and legislators should carry in their hearts the other lesson that Blake has taught us: ‘One Law for the Lion and Ox is Oppression.’”

Freeman Dyson didn’t invent Open technologies, but he does talk a lot of sense. At Red Hat we like to think that the paradigm shift leading us to Cloud has a backbone of open, creative, technological folk wearing red fedoras who want to understand how you can get to Cloud today, securely and safely, and who want to empower you to answer the difficult question you may face when positioning open technologies against proprietary stacks: how much does it cost to say no? After reading this article you should be able to answer a lot of that question yourself. Working with Red Hat, we’ll help you get the rest of those answers using the knowledge we’ve built up over the last thirteen years in the enterprise marketplace.

I was also at GigaOM Structure in Amsterdam recently, where Brian Stevens from Red Hat sat on stage for a fireside chat and was asked whether GitHub was the new Linux. The question made me giggle nervously, as Linux is Linux. There was no polished answer, but it falls to a company such as Red Hat, and to thought leaders such as Brian, Paul Cormier or Jim Whitehurst, to continue to prove that our work and our mission statement carry the same punch as Freeman Dyson’s almost prophetic paper did way back in 1974.

The same level of expectation surrounds our support of OpenStack, which is critical for open hybrid cloud and aligned with our proven Red Hat stack. We are doing it openly and transparently as Platinum members of the OpenStack Foundation. You can get the latest Folsom technology preview by clicking here for RHEL users. It means being willing to say no to proprietary working methodologies while aiding and maturing OpenStack and the whole Cloud paradigm.

There’s one future-proof cloud, and it’s open.

Happy Thanksgiving, and thanks for reading. With grateful thanks to Freeman Dyson, one of the greatest technologists of the modern world. The original Sheldon Cooper.

Download the podcast here in MP3 format only
